Section: New Results

Understanding and modeling users

Participants: Géry Casiez, Christian Frisson, Alix Goguey, Stéphane Huot, Sylvain Malacria, Mathieu Nancel, Thibault Raffaillac, Nicolas Roussel.

Touch interaction with finger identification: which finger(s) for what?

The development of robust methods to identify which finger is causing each touch point, called “finger identification”, will open up a new input space where interaction designers can associate system actions with different fingers [11]. However, relatively little is known about the performance of specific fingers as single touch points or when used together in a “chord”. We presented empirical results for accuracy, throughput, and subjective preference gathered in five experiments with 48 participants exploring all 10 fingers and 7 two-finger chords. Based on these results, we developed design guidelines for reasonable target sizes for specific fingers and two-finger chords, and a relative ranking of the suitability of fingers and two-finger chords for common multi-touch tasks. Our work contributes new knowledge regarding specific finger and chord performance and can inform the design of future interaction techniques and interfaces utilizing finger identification [28].
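As an illustration of the kind of analysis behind throughput and target-size figures, the sketch below computes an ISO 9241-9-style effective throughput and a dispersion-based target size from per-finger selection data. It is a minimal sketch under stated assumptions, not necessarily the computation used in [28]: the function names are hypothetical, endpoints are taken as signed offsets along a single axis, and the target size assumes normally distributed touches and aims to cover about 95% of them (z = 1.96).

```python
import math
import statistics


def effective_throughput(distance, endpoint_offsets, movement_times):
    """Effective throughput (bits/s) for one distance condition (hypothetical helper).

    endpoint_offsets: signed selection offsets from the target centre along the
    movement axis, in the same unit as distance; movement_times: in seconds.
    """
    effective_width = 4.133 * statistics.stdev(endpoint_offsets)
    effective_id = math.log2(distance / effective_width + 1)  # bits
    return effective_id / statistics.mean(movement_times)


def target_size(touch_offsets, z=1.96):
    """Width expected to contain ~95% of touches along one axis (normality assumed)."""
    return 2 * z * statistics.stdev(touch_offsets)


# Hypothetical data for one finger: offsets in mm, movement times in s.
offsets = [-1.0, 0.0, 1.0]
print(round(target_size(offsets), 2))  # 3.92
print(round(effective_throughput(4.133 * 3, offsets, [0.5, 0.5]), 2))  # 4.0
```

Running these per finger and per two-finger chord gives comparable numbers across conditions; the 4.133 factor corresponds to the ±2.066 standard deviation interval conventionally used to define effective width.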

Training and use of brain-computer interfaces

Brain-Computer Interfaces (BCIs) are much less reliable than other input devices, with error rates ranging from 5% up to 60%. To assess the subjective frustration, motivation, and fatigue of users confronted with different error rates, we conducted a BCI experiment in which the error rate was artificially controlled. Our results show that prolonged BCI use significantly increases perceived fatigue and induces a drop in motivation [38]. We also found that user frustration increases with the error rate of the system, but this increase does not seem critical for small differences in error rate. For future BCIs, we thus advise favoring user comfort over accuracy when the potential gain in accuracy remains small.
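One way to control the error rate artificially, sketched below under our own assumptions, is to bypass the classifier output and show the intended command except on a pre-drawn set of trials, so that each block contains exactly the targeted proportion of errors. The helper names and the two-command setup are hypothetical and do not describe the protocol actually used in [38].

```python
import random


def error_schedule(n_trials, error_rate, rng):
    """Hypothetical helper: pick which trials of a block are forced wrong (exact count)."""
    n_errors = round(n_trials * error_rate)
    schedule = [True] * n_errors + [False] * (n_trials - n_errors)
    rng.shuffle(schedule)
    return schedule


def controlled_feedback(intended, commands, error_rate, seed=0):
    """Feedback shown to the user: the intended command, except on scheduled error trials."""
    rng = random.Random(seed)
    feedback = []
    for target, force_error in zip(intended, error_schedule(len(intended), error_rate, rng)):
        if force_error:
            # Show one of the other commands instead of the intended one.
            feedback.append(rng.choice([c for c in commands if c != target]))
        else:
            feedback.append(target)
    return feedback


# Hypothetical block of 40 trials with two commands and a 20% error rate.
intended = ["left", "right"] * 20
shown = controlled_feedback(intended, ["left", "right"], 0.2)
print(sum(a != b for a, b in zip(intended, shown)))  # 8
```

Drawing a fixed schedule per block, rather than flipping a coin at each trial, keeps the experienced error rate identical across participants, which matters when comparing conditions that differ by only a few percentage points.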

We have also investigated whether the stimulation used to train an SSVEP-based BCI has to be similar to the one ultimately used for interaction. We recorded 6-channel EEG data from 12 subjects under various conditions of distance between targets and of color difference between targets. Our analysis revealed that the training stimulation configuration that leads to the best classification accuracy is not always the one closest to the end-use configuration [15]. We found that the distance between targets during training has little influence if the end-use targets are close to each other, but that training at a far distance can lead to better accuracy for far-distance end use. Additionally, we observed an interaction effect between training and testing color: while training with monochrome targets leads to good performance only when the test context involves monochrome targets as well, a classifier trained on colored targets can be efficient for both colored and monochrome targets. In a nutshell, in the context of SSVEP-based BCIs, training with distant targets of different colors seems to lead to the best and most robust performance in all end-use contexts.
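The training/testing question can be pictured with a deliberately simple pipeline, sketched below: each trial is reduced to its spectral power at the stimulation frequencies, a nearest-centroid classifier is trained on trials from one stimulation configuration, and accuracy is then measured on trials from another. This is a hypothetical illustration on synthetic signals; the feature, the classifier, the frequencies, and the sampling rate are our assumptions, not those of [15].

```python
import math
import random

FS = 128                  # sampling rate in Hz (assumed)
STIM_FREQS = [8, 10, 12]  # stimulation frequencies in Hz (assumed)


def power_at(signal, freq, fs=FS):
    """Spectral power of one channel at `freq`, via a single-bin DFT."""
    w = 2 * math.pi * freq / fs
    re = sum(x * math.cos(w * n) for n, x in enumerate(signal))
    im = sum(x * math.sin(w * n) for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal)


def features(trial):
    """One feature per stimulation frequency, summed over EEG channels."""
    return [sum(power_at(ch, f) for ch in trial) for f in STIM_FREQS]


def train(trials, labels):
    """Nearest-centroid training: mean feature vector per target frequency."""
    groups = {}
    for trial, label in zip(trials, labels):
        groups.setdefault(label, []).append(features(trial))
    return {lab: [sum(col) / len(col) for col in zip(*vecs)]
            for lab, vecs in groups.items()}


def predict(centroids, trial):
    """Label of the centroid closest (Euclidean) to the trial's features."""
    feat = features(trial)
    return min(centroids, key=lambda lab: math.dist(feat, centroids[lab]))


def accuracy(centroids, trials, labels):
    hits = sum(predict(centroids, t) == y for t, y in zip(trials, labels))
    return hits / len(trials)


def synth_trial(freq, rng, amp=1.0, channels=6, seconds=2.0):
    """Synthetic SSVEP trial: a sinusoid at `freq` in Gaussian noise on each channel."""
    n = int(FS * seconds)
    return [[amp * math.sin(2 * math.pi * freq * i / FS + ch) + rng.gauss(0, 1)
             for i in range(n)] for ch in range(channels)]


rng = random.Random(0)
labels = STIM_FREQS * 10
train_set = [synth_trial(f, rng) for f in labels]          # "training configuration"
test_set = [synth_trial(f, rng, amp=0.5) for f in labels]  # "end-use configuration"
model = train(train_set, labels)
print(accuracy(model, test_set, labels))
```

Filling a training-configuration by end-use-configuration accuracy matrix in this way mirrors the shape of the comparison reported above; synthetic sinusoids cannot, however, reproduce the color and distance effects themselves.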

Evaluation metrics for touch latency compensation

Touch systems have a delay between user input and the corresponding visual feedback, called input “latency” (or “lag”). Visual latency is more noticeable during continuous input actions like dragging, so methods that display feedback based on the most likely path of the next few input points have been described in research papers and patents. Designing these “next-point prediction” methods is challenging, and there have been no standard metrics to compare different approaches. We introduced metrics to quantify the probability of 7 spatial error “side-effects” caused by next-point prediction methods [35]. Types of side-effects were derived using a thematic analysis of comments gathered in a 12-participant study covering drawing, dragging, and panning tasks with 5 state-of-the-art next-point predictors. Using experiment logs of actual and predicted input points, we developed quantitative metrics that correlate positively with the frequency of perceived side-effects. These metrics enable practitioners to compare next-point predictors using only input logs.
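To make the idea of log-based metrics concrete, the sketch below evaluates a simple next-point predictor offline on a logged trajectory and splits each prediction error into an along-track component (positive when the prediction overshoots, negative when it lags) and a cross-track component. Both the linear-extrapolation predictor and these summary measures are hypothetical illustrations; they are not the 7 side-effect metrics introduced in [35].

```python
import math


def predict_linear(history):
    """Hypothetical predictor: extrapolate the last displacement one step ahead."""
    (x0, y0), (x1, y1) = history[-2], history[-1]
    return (2 * x1 - x0, 2 * y1 - y0)


def error_components(prev, actual, predicted):
    """Split the prediction error along and across the actual movement direction."""
    ex, ey = predicted[0] - actual[0], predicted[1] - actual[1]
    dx, dy = actual[0] - prev[0], actual[1] - prev[1]
    step = math.hypot(dx, dy)
    if step == 0:
        # No movement: direction undefined, so count any predicted motion as overshoot.
        return math.hypot(ex, ey), 0.0
    ux, uy = dx / step, dy / step
    along = ex * ux + ey * uy   # > 0 overshoot, < 0 lag
    across = ey * ux - ex * uy  # signed lateral deviation
    return along, across


def summarize(points, predictor=predict_linear):
    """Offline evaluation of `predictor` on a logged list of (x, y) input points."""
    along, across = [], []
    for i in range(2, len(points)):
        a, c = error_components(points[i - 1], points[i], predictor(points[:i]))
        along.append(a)
        across.append(c)
    n = len(along)
    return {
        "mean_along": sum(along) / n,
        "mean_abs_across": sum(abs(c) for c in across) / n,
        "overshoot_rate": sum(a > 0 for a in along) / n,
    }


# An accelerating drag: the linear predictor consistently lags behind by 2 units.
drag = [(i * i, 0) for i in range(10)]
print(summarize(drag))  # {'mean_along': -2.0, 'mean_abs_across': 0.0, 'overshoot_rate': 0.0}
```

Because only logged input points are needed, the same loop can compare several predictors on identical input, which is the practical benefit that log-based metrics aim for.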

Application use in the real world

Interface designers, HCI researchers, and usability experts often need to collect information about the usage of interactive systems and applications, either to interpret quantitative and behavioral aspects of users' activity (as in our study on the use of trackpads described below) or to provide user interface guidelines. Unfortunately, most existing applications are closed to such probing methods: source code or scripting support is not always available to collect and analyze users' behaviors in real-world scenarios.

InspectorWidget [26] is an open-source, cross-platform application we designed to track and analyze users' behaviors in interactive software. Its key benefits are: 1) it works with closed applications that provide neither source code nor scripting capabilities; 2) it covers the whole pipeline of software analysis, from logging input events to visual statistics, through browsing and programmable annotation; 3) it allows post-recording logging; and 4) it does not require programming skills. To achieve this, InspectorWidget combines low-level event logging (e.g., mouse and keyboard events) with high-level screen capturing and interpretation features (e.g., interface widget detection) based on computer vision techniques.
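Combining the two levels of data essentially requires aligning timestamped input events with recorded screen frames, so that each event of interest can be paired with the image in which a widget is to be detected. The sketch below shows one way to do this with a binary search over frame timestamps; the event format, field names, and the 25 fps rate used in the example are hypothetical and are not InspectorWidget's actual data model or API. Since the alignment only works on stored logs and frames, it can run after recording.

```python
import bisect
from dataclasses import dataclass


@dataclass
class InputEvent:
    """Hypothetical logged event format."""
    t: float   # seconds since the start of the recording
    kind: str  # e.g. "mouse_down", "key_down"
    x: int = 0
    y: int = 0


def nearest_frame(frame_times, t):
    """Index of the recorded frame closest in time to t (frame_times must be sorted)."""
    i = bisect.bisect_left(frame_times, t)
    if i == 0:
        return 0
    if i == len(frame_times):
        return len(frame_times) - 1
    # Pick the closer neighbour; ties go to the earlier frame.
    return i if frame_times[i] - t < t - frame_times[i - 1] else i - 1


def annotate(events, frame_times, kinds=("mouse_down",)):
    """Pair each selected event with the frame to inspect (e.g. for widget detection)."""
    return [(e, nearest_frame(frame_times, e.t)) for e in events if e.kind in kinds]


frames = [i / 25 for i in range(100)]  # hypothetical 25 fps screen recording
events = [InputEvent(0.05, "mouse_down", 10, 20), InputEvent(0.06, "key_down"),
          InputEvent(0.07, "mouse_down", 30, 40)]
print([idx for _, idx in annotate(events, frames)])  # [1, 2]
```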

Trackpad use in the real world

Trackpads (or touchpads) allow users to control an on-screen cursor with finger movements on their surface. Recent models also support force sensing and multi-touch interactions, which make it possible, for example, to scroll a document by moving two fingers or to switch between virtual desktops with four fingers. But despite their widespread use, little is known about how users interact with them and which gestures they are most familiar with. To better understand this, we conducted a three-step field study with the multi-touch trackpads of Apple MacBooks.

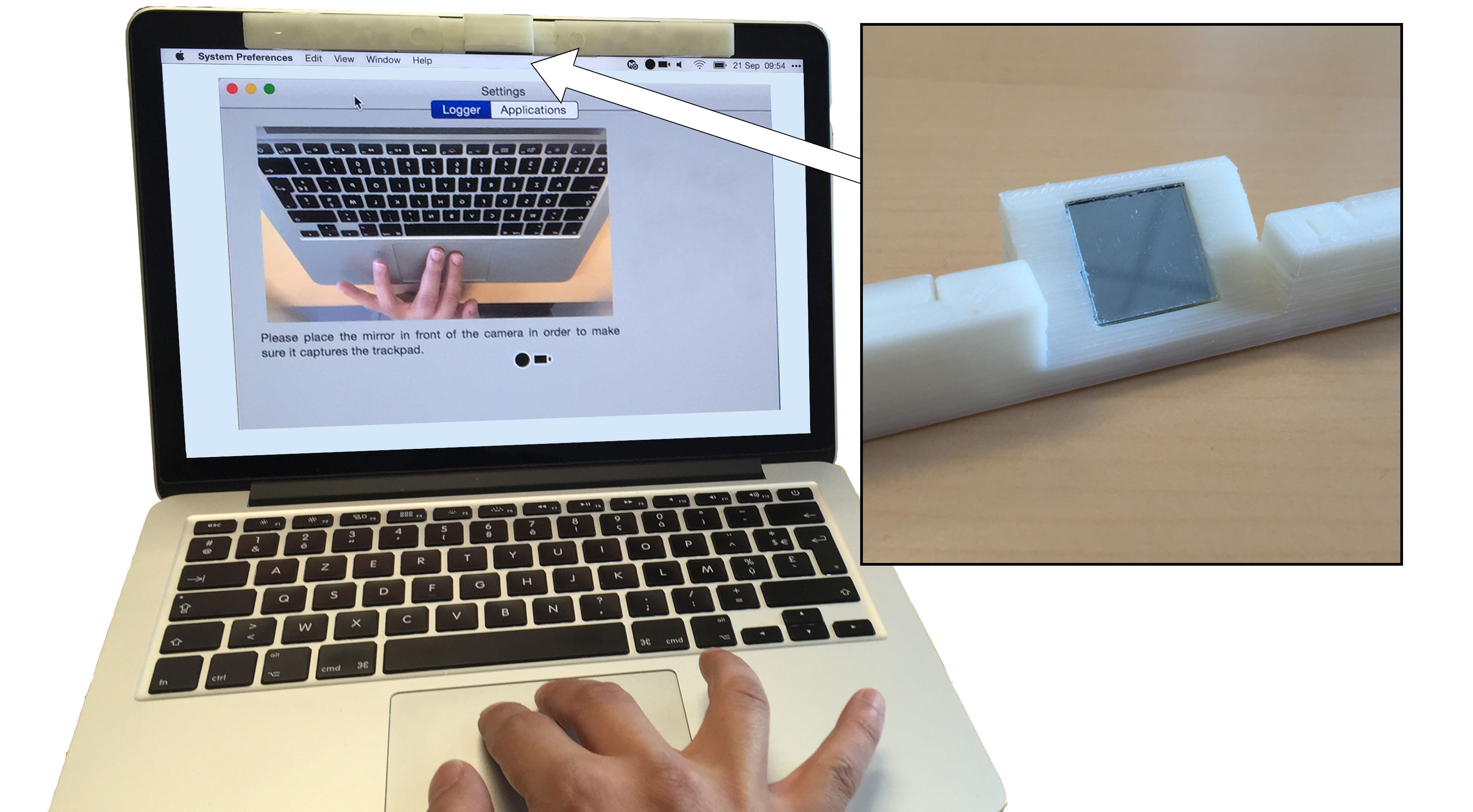

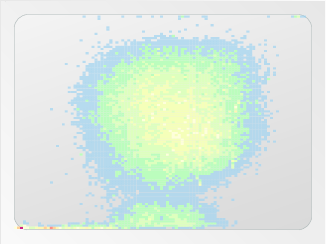

The first step of our study consisted of collecting low-level interaction data, such as contact points on the trackpad and the multi-touch gestures performed while interacting. We developed a dedicated interaction logging application that we deployed on the workstations of 11 users for 14 days, and collected a total of over 82 million contact points and almost 220,000 gestures. We then investigated finger chords (i.e., the fingers used) and hand usage when interacting with a trackpad. To that end, we designed a dedicated mirror stand that can easily be positioned in front of the laptop's embedded webcam to redirect its field of view (Figure 1, left). This mirror stand is combined with a background application that takes photos when a multi-finger gesture is performed. We deployed this setup on the computers of 9 users for 14 days. Finally, we deployed a system preference collection application to gather the trackpad system preferences (such as the transfer function and associated gestures) of 80 users. Our main findings are that touch contacts on the trackpad are performed on a limited sub-surface and are relatively slow (Figure 1, right); that the consistency of users' finger chords varies depending on the frequency of a gesture and the number of fingers involved; and that users tend to rely on the default system preferences of the trackpad [34].
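The first two findings can be quantified from such logs in many ways; the sketch below shows one hypothetical option for each. The used sub-surface is estimated as the fraction of the trackpad area covered by the box containing the central 90% of contacts on each axis, and chord consistency (computed per user) as the share of a gesture's occurrences performed with its most frequent finger chord. The function names, the 90% threshold, and the data layout (contact coordinates in trackpad units, chords as sets of finger names) are our assumptions, not the analysis reported in [34].

```python
import math
from collections import Counter


def percentile(sorted_values, q):
    """Nearest-rank percentile (q in [0, 100]) of an already sorted list."""
    rank = math.ceil(q * len(sorted_values) / 100)
    return sorted_values[min(max(rank - 1, 0), len(sorted_values) - 1)]


def used_area_fraction(contacts, width, height, coverage=90):
    """Share of the trackpad area spanned by the central `coverage`% of contacts per axis."""
    tail = (100 - coverage) / 2
    xs = sorted(x for x, _ in contacts)
    ys = sorted(y for _, y in contacts)
    box_w = percentile(xs, 100 - tail) - percentile(xs, tail)
    box_h = percentile(ys, 100 - tail) - percentile(ys, tail)
    return (box_w * box_h) / (width * height)


def chord_consistency(observations):
    """For each gesture, share of its occurrences made with its most frequent chord.

    observations: iterable of (gesture_name, fingers) pairs for one user,
    where fingers is a set of (hypothetical) finger names.
    """
    per_gesture = {}
    for gesture, fingers in observations:
        per_gesture.setdefault(gesture, Counter())[frozenset(fingers)] += 1
    return {g: counts.most_common(1)[0][1] / sum(counts.values())
            for g, counts in per_gesture.items()}


pad = [(x, y) for x in range(100) for y in range(100)]  # uniform synthetic contacts
print(used_area_fraction(pad, 100, 100))  # 0.81
```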